Style Change Detection 2017

Synopsis

- Task: Given a document, determine whether it contains style changes or not.

- Input: [data]

- Evaluation: [code]

- Submission: [submit]

Introduction

While many approaches target the problem of identifying authors of whole documents, research on investigating multi-authored documents is sparse. To narrow the gap, the author diarization task of the PAN-2016 edition already focused on collaboratively written documents, attempting to cluster text by authors within documents. This year we modify the problem by asking participants to detect style breaches within documents, i.e., to locate borders where authorship changes.

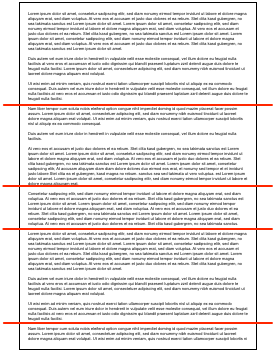

The problem is therefore related to the text segmentation problem, with the difference that the latter usually focus on detecting switches of topics or stories. In contrast to that, this task aims to find borders based on the writing style, disregarding the specific content. As the goal is to only find borders, it is irrelevant to identify or cluster authors of segments. A simple example consisting of four breaches of style (switches / borders) is illustrated below:

Task

Given a document, determine whether it is multi-authored, and if yes, find the borders where authors switch.

All documents are provided in English and may contain anywhere from zero to arbitrarily many switches (style breaches). Authorship switches may only occur at the end of sentences, i.e., never within a sentence.
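Since borders may only fall at sentence ends, a natural first step is to enumerate the candidate positions. The following Python sketch does this with a naive regular expression (an assumption; the task does not prescribe any sentence splitter) and reports each candidate as the offset of the first non-whitespace character after a sentence end, which is the convention used for borders in the truth files. `candidate_borders` is a hypothetical helper name.

```python
import re

# Naive sentence-end pattern (an assumption, not an official splitter):
# terminal punctuation, optional closing quotes/brackets, then whitespace.
_SENTENCE_END = re.compile(r"[.!?]+[\"')\]]*\s+")


def candidate_borders(text: str) -> list[int]:
    """Return character offsets where a new sentence (and thus a segment) could start.

    Each offset points at the first non-whitespace character after a sentence end.
    """
    positions = []
    for match in _SENTENCE_END.finditer(text):
        start = match.end()  # \s+ is greedy, so this lies past all the whitespace
        if start < len(text):  # punctuation at the very end of the text is no border
            positions.append(start)
    return positions


print(candidate_borders("First one. Second one! Third?"))  # [11, 23]
```

Abbreviations such as "e.g." will produce spurious candidates with this pattern, so a proper sentence splitter is advisable in practice.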

Development Phase

To develop your algorithms, a training data set including corresponding solutions is provided.

For each problem instance X, two files are provided:

- problem-X.txt contains the actual text.

- problem-X.truth contains the ground truth, i.e., the correct solution in JSON format:

{ "borders": [ character_position_border_1, character_position_border_2, … ] }

To identify a border, the absolute character position of the first non-whitespace character of the new segment is used. The document starts at character position 0. An example matching the borders of the image above could look as follows:

{ "borders": [1709, 3119, 3956, 5671] }

An empty array indicates that the document is single-authored, i.e., contains no style switches:

{ "borders": [] }
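A minimal Python sketch for reading the training pairs could look as follows. The function name `load_training_pairs` and the UTF-8 encoding are assumptions. The text files are opened with `newline=""` so that Python does not translate `\r\n` line endings, which would shift the character offsets; the sketch also assumes that offsets count Unicode code points, as Python string indices do.

```python
import json
from pathlib import Path


def load_training_pairs(corpus_dir):
    """Yield (problem_id, text, borders) for every problem-X.txt / problem-X.truth pair."""
    for txt_path in sorted(Path(corpus_dir).glob("problem-*.txt")):
        problem_id = txt_path.stem  # e.g. "problem-3"
        # newline="" keeps line endings untouched so character offsets stay valid.
        with open(txt_path, encoding="utf-8", newline="") as handle:
            text = handle.read()
        with open(txt_path.with_suffix(".truth"), encoding="utf-8") as handle:
            borders = json.load(handle)["borders"]
        # Sanity check: each border must point at a non-whitespace character inside the text.
        bad = [b for b in borders if not (0 < b < len(text)) or text[b].isspace()]
        if bad:
            raise ValueError(f"{problem_id}: invalid border positions {bad}")
        yield problem_id, text, borders
```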

Evaluation Phase

Once you have finished tuning your approach to achieve satisfactory performance on the training corpus, your software will be tested on the evaluation corpus. During the competition, the evaluation corpus will not be released publicly. Instead, we ask you to submit your software for evaluation at our site as described below. After the competition, the evaluation corpus will be made available, including ground truth data. This way, you have everything needed to evaluate your approach on your own while remaining comparable to those who took part in the competition.

Output

In general, the data structure during the evaluation phase will be similar to that in the training phase, with the exception that the ground truth files are missing. Thus, for each given problem problem-X.txt, your software should output the missing solution file problem-X.truth.

The output syntax must be exactly as described in the Development Phase section.
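One way to produce the required output is sketched below in Python. `detect_borders` is a hypothetical placeholder that predicts every document to be single-authored, and the `input_dir`/`output_dir` parameters are assumptions about how your software receives its paths; substitute your own detector and invocation.

```python
import json
from pathlib import Path


def detect_borders(text):
    """Hypothetical placeholder detector: always predicts a single-authored document."""
    return []


def write_predictions(input_dir, output_dir, detector=detect_borders):
    """Write problem-X.truth into output_dir for every problem-X.txt in input_dir."""
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    for txt_path in sorted(Path(input_dir).glob("problem-*.txt")):
        # newline="" preserves the original line endings, so offsets match the raw file.
        with open(txt_path, encoding="utf-8", newline="") as handle:
            text = handle.read()
        borders = sorted(int(b) for b in detector(text))
        truth_path = out / (txt_path.stem + ".truth")
        truth_path.write_text(json.dumps({"borders": borders}), encoding="utf-8")
```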

Performance Measures

To evaluate the predicted style breaches, two metrics will be used:

- the WindowDiff metric (Pevzner, Hearst, 2002) was proposed for general text segmentation evaluation and is still the de facto standard for such problems. It gives an error rate between 0 and 1 (0 indicating a perfect prediction) for the predicted borders, penalizing near-misses less than complete misses or extra borders.

- a more recent adaptation of WindowDiff is the WinPR metric (Scaiano, Inkpen, 2012). It extends WindowDiff by computing the common information retrieval measures precision (WinP) and recall (WinR), and thus allows a more detailed, qualitative statement about the prediction. For the final ranking of all participating teams, the F-score of WinPR will be used.
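To get a feeling for how the two measures behave, here is a simplified Python sketch of both, operating on word-level border indices (a border `b` means a new segment starts at word `b`). WindowDiff counts the windows of size k in which the numbers of reference and predicted borders differ; the WinPR sketch sums per-window true positives (the smaller of both counts), false positives and false negatives. The default k (half the mean reference segment length) is a common convention assumed here, and the sketch omits details of the original WinPR definition (such as how windows are handled at the document edges), so its numbers may differ from the official evaluator, which remains authoritative.

```python
def _window_counts(borders, n, k):
    """Number of borders b with i < b <= i + k, for each window start i in 0 .. n - k - 1."""
    return [sum(1 for b in borders if i < b <= i + k) for i in range(n - k)]


def _default_k(ref, n):
    """Half the mean reference segment length (assumed convention), at least 1."""
    return max(1, round(n / (2 * (len(ref) + 1))))


def _check_k(k, n):
    if not 0 < k < n:
        raise ValueError("window size k must satisfy 0 < k < n")


def window_diff(ref, hyp, n, k=None):
    """WindowDiff error rate in [0, 1]; 0 means no window disagrees with the reference."""
    k = _default_k(ref, n) if k is None else k
    _check_k(k, n)
    ref_counts = _window_counts(ref, n, k)
    hyp_counts = _window_counts(hyp, n, k)
    errors = sum(r != h for r, h in zip(ref_counts, hyp_counts))
    return errors / (n - k)


def win_pr(ref, hyp, n, k=None):
    """Simplified WinPR: returns (WinP, WinR, F-score) from per-window border counts."""
    k = _default_k(ref, n) if k is None else k
    _check_k(k, n)
    tp = fp = fn = 0
    for r, h in zip(_window_counts(ref, n, k), _window_counts(hyp, n, k)):
        tp += min(r, h)      # borders present in both windows
        fp += max(0, h - r)  # surplus predicted borders
        fn += max(0, r - h)  # missed reference borders
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f_score


# Reference border at word 5 in a 10-word document, window size k = 2.
print(window_diff([5], [6], n=10, k=2))  # near-miss: 0.25
print(window_diff([5], [8], n=10, k=2))  # miss plus extra border: 0.5
print(win_pr([5], [6], n=10, k=2))       # (0.5, 0.5, 0.5)
```

In this example the near-miss at word 6 costs half as much WindowDiff error as missing the border and predicting one at word 8 instead, which is the lighter penalty for near-misses described above.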

Note that while both metrics will be computed at the word level, you still have to provide your solutions at the character level (the tokenization is delegated to the evaluator).
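If you want to compute the measures locally, character-level borders first have to be mapped to word indices. The Python sketch below assumes plain whitespace tokenization, which may differ from the tokenization the evaluator actually uses. Because a border is the first non-whitespace character of a segment, under this assumption it coincides with the start of a token, so a binary search over the token start offsets suffices. `char_to_word_borders` is a hypothetical helper name.

```python
import bisect
import re


def char_to_word_borders(text, char_borders):
    """Map character-level borders to word indices, assuming whitespace tokenization."""
    # Start offset of every whitespace-delimited token, in ascending order.
    token_starts = [m.start() for m in re.finditer(r"\S+", text)]
    # A border at a token start maps to that token's index.
    return [bisect.bisect_left(token_starts, pos) for pos in char_borders]


print(char_to_word_borders("Hello world. Second author here.", [13]))  # [2]
```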

For your convenience, we provide the evaluator script written in Python. It takes three parameters: an input directory (the data set), an inputRun directory (your computed breaches), and an output directory to which the results file is written. Of course, you are free to modify the script according to your needs.

Related Work

- Author Clustering, PAN@CLEF'16

- Marti A. Hearst. TextTiling: Segmenting Text into Multi-paragraph Subtopic Passages. In Computational Linguistics, Volume 23, Issue 1, pages 33-64, 1997.

- Benno Stein, Nedim Lipka and Peter Prettenhofer. Intrinsic Plagiarism Analysis. In Language Resources and Evaluation, Volume 45, Issue 1, pages 63-82, 2011.

- Patrick Juola. Authorship Attribution. In Foundations and Trends in Information Retrieval, Volume 1, Issue 3, March 2008.

- Efstathios Stamatatos. A Survey of Modern Authorship Attribution Methods. In Journal of the American Society for Information Science and Technology, Volume 60, Issue 3, pages 538-556, March 2009.