Style Change Detection 2019

Synopsis

- Task: Is the document written by one author or by several, and if by several, how many authors have collaborated?

- Input: [data]

- Evaluation: [code]

- Submission: [submit]

Introduction

Many approaches have been proposed recently to identify the author of a given document. One assumption is often made silently, namely that the given document is indeed written by only one author. For a realistic author identification system, it is therefore crucial to first determine whether a document is single- or multi-authored.

To this end, previous PAN editions aimed to analyze multi-authored documents. Since reliably identifying individual authors and their contributions within a single document has proven to be a hard problem (Author Diarization, 2016; Style Breach Detection, 2017), last year's task substantially relaxed the problem by asking only for a binary decision (single- or multi-authored). Considering the promising results achieved by the submitted approaches (see the overview paper for details), we continue last year's task and additionally ask participants to predict the number of involved authors.

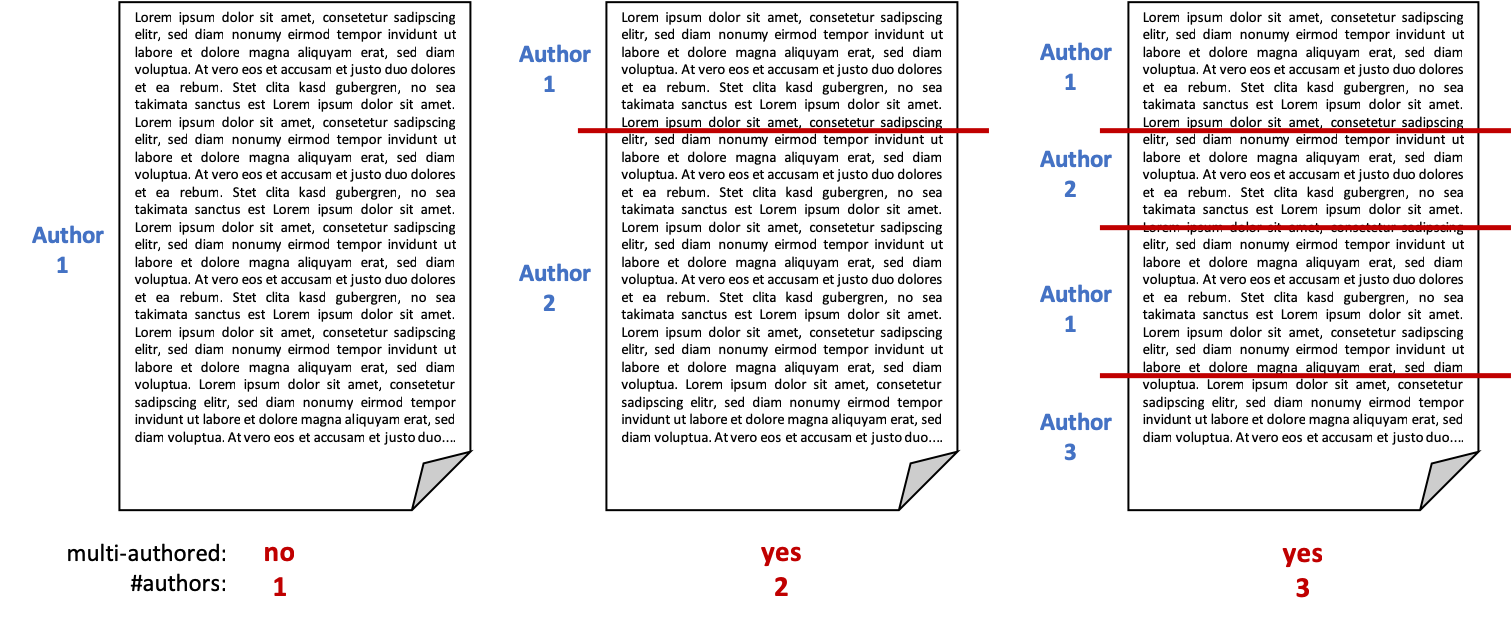

Given a document, participants thus should apply intrinsic style analyses to hierarchically answer the following questions:

- Is the document written by one or more authors, i.e., do style changes exist or not?

- If it is multi-authored, how many authors have collaborated?

The figure above illustrates some possible scenarios and the expected output.

Note that it is irrelevant to identify the number of style changes or the specific positions where authorship changes.

Task

Given a document, determine whether it contains style changes or not, i.e., if it was written by a single or multiple authors. If it is written by more than one author, determine the number of involved collaborators.

All documents are provided in English and may contain anywhere from zero to arbitrarily many style changes, resulting from arbitrarily many authors.

Development Phase

To develop your algorithms, a data set including corresponding solutions is provided:

- a training set: contains 50% of the whole dataset and includes solutions. Use this set to feed/train your models.

- a validation set: contains 25% of the whole dataset and includes solutions. Use this set to evaluate and optimize your models.

- a test set: contains 25% of the whole dataset and does not include solutions. This set is used for evaluation (see later).

Like last year, the whole data set is based on user posts from various sites of the StackExchange network, covering different topics and containing approximately 300 to 2000 tokens per document.

For each problem instance X, two files are provided:

- problem-X.txt contains the actual text

- problem-X.truth contains the ground truth, i.e., the correct solution in JSON format:

{

"authors": number_of_authors,

"structure": [author_segment_1, ..., author_segment_3],

"switches": [character_pos_switch_segment_1, ..., character_pos_switch_segment_n]

}

An example for a multi-author document could look as follows:

{

"authors": 4,

"structure": ["A1", "A2", "A4", "A2", "A4", "A2", "A3", "A2", "A4"],

"switches": [805, 1552, 2827, 3584, 4340, 5489, 7564, 8714]

}

whereas a single-author document would have exactly the following form:

{

"authors": 1,

"structure": ["A1"],

"switches": []

}

Note that authors within the structure correspond only to the respective document, i.e., they are not the same over the whole dataset. For example, author A1 in document 1 is most likely not the same author as A1 in document 2 (it could be, but as there are hundreds of authors the chances are very small that this is the case). Further, please consider that the structure and the switches are provided only as additional resources for the development of your algorithms, i.e., they are not expected to be predicted.
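As a minimal sketch of how the ground truth described above might be used during development, the following Python snippet parses a `.truth` JSON string and cuts the document text at the given switch positions. The helper name `split_by_switches` and the toy text are hypothetical. The sketch also assumes that each entry in `switches` is a zero-based character offset at which the next segment begins; the format description does not spell this out, so please verify it against the data.

```python
import json


def split_by_switches(text, truth):
    """Pair each author label in `structure` with its text segment.

    Assumption: each entry in `switches` is the zero-based character
    offset at which the next segment begins.
    """
    bounds = [0] + list(truth["switches"]) + [len(text)]
    segments = [text[a:b] for a, b in zip(bounds, bounds[1:])]
    if len(segments) != len(truth["structure"]):
        raise ValueError("structure and switches are inconsistent")
    return list(zip(truth["structure"], segments))


# Toy example (hypothetical, not taken from the dataset).
toy_text = "First part. Second part. Third part."
toy_truth = json.loads(
    '{"authors": 2, "structure": ["A1", "A2", "A1"], "switches": [12, 25]}'
)
pairs = split_by_switches(toy_text, toy_truth)
for author, segment in pairs:
    print(author, repr(segment))
```

Segment-level labels obtained this way are only a development aid; the value to be predicted remains the `authors` count.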

To tackle the problem, you can develop novel approaches, extend existing algorithms from last year's task, or adapt approaches from related problems such as intrinsic plagiarism detection or text segmentation. You are also free to additionally evaluate your approaches on last year's training/validation/test dataset (for the number of authors, use the corresponding metadata).

Evaluation Phase

Once you have finished tuning your approach to achieve satisfactory performance on the training corpus, your software will be tested on the evaluation corpus (test data set). You can expect the test data set to be similar to the validation data set, i.e., also based on StackExchange user posts and of similar size as the validation set. During the competition, the evaluation corpus will not be released publicly. Instead, we ask you to submit your software for evaluation at our site as described below.

After the competition, the evaluation corpus will become available including ground truth data. This way, you have everything you need to evaluate your approach on your own while remaining comparable to those who took part in the competition.

Output

In general, the data structure during the evaluation phase will be similar to that in the training phase, with the exception that the ground truth files are missing.

For each given problem problem-X.txt, your software should output the missing solution file problem-X.truth, containing a JSON object with a single property:

{

"authors": number_of_authors

}

Performance Measures

The performance of the submitted approaches will be ranked by a combined measure incorporating both the accuracy of distinguishing single- from multi-author documents and the correctness of the predicted number of authors:

- Accuracy will measure the performance of correctly separating single-author (1 author) from multi-author (> 1 author) documents.

- The Ordinal Classification Index (OCI) will be used to measure the error of predicting the number of authors for multi-author documents (i.e., only for multi-author documents and not for single-author documents). OCI was proposed by Cardoso and Sousa in their paper "Measuring the performance of ordinal classification". It is computed directly from the confusion matrix and yields a value between 0 and 1, with 0 being the best value (perfect prediction).

- The final rank is computed as the average of the classification accuracy and the (inverted) OCI score: $$ \text{rank} = \frac{\text{accuracy} + (1 - \text{OCI})}{2} $$
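To make the combination of the two measures concrete, here is a small Python sketch. It computes the single- vs. multi-author accuracy from lists of author counts and plugs it into the rank formula above. The function names and the toy numbers are hypothetical, and the OCI value is simply passed in as a given number: this sketch does not implement OCI itself, and the official evaluation code remains authoritative.

```python
def separation_accuracy(true_counts, predicted_counts):
    """Share of documents whose single/multi-author label is correct."""
    if not true_counts or len(true_counts) != len(predicted_counts):
        raise ValueError("need two non-empty lists of equal length")
    hits = sum(
        (t > 1) == (p > 1) for t, p in zip(true_counts, predicted_counts)
    )
    return hits / len(true_counts)


def final_rank(accuracy, oci):
    """Average of accuracy and inverted OCI, as in the formula above."""
    return (accuracy + (1.0 - oci)) / 2.0


# Toy numbers (hypothetical): the second document is wrongly flagged
# as multi-authored, so 4 of 5 binary decisions are correct.
true_counts = [1, 1, 3, 2, 4]
predicted_counts = [1, 2, 2, 2, 5]
acc = separation_accuracy(true_counts, predicted_counts)
rank = final_rank(acc, oci=0.5)  # 0.5 is an assumed OCI value
print(acc, rank)
```

Note that a wrong author count on a multi-author document (3 predicted as 2, say) does not lower the accuracy term; it only affects the OCI term.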

Submission

We ask you to prepare your software so that it can be executed via command line calls. The command shall take as input (i) an absolute path to the directory of the evaluation corpus and (ii) an absolute path to an empty output directory:

mySoftware -i EVALUATION-DIRECTORY -o OUTPUT-DIRECTORY

Within EVALUATION-DIRECTORY, you will find a list of problem instances, i.e., [filename].txt files. For each problem instance, you should produce the solution file [filename].truth in the OUTPUT-DIRECTORY. For instance, you read EVALUATION-DIRECTORY/problem-12.txt, process it and write your results to OUTPUT-DIRECTORY/problem-12.truth.
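The calling convention above could be wired up roughly as follows. This is a sketch only: `predict_author_count` is a hypothetical placeholder that always answers 1, and the UTF-8 file encoding is an assumption not stated in the task description. Only the `-i`/`-o` options, the `.txt` to `.truth` naming, and the `authors` property come from the text above.

```python
import argparse
import json
from pathlib import Path


def predict_author_count(text):
    """Hypothetical placeholder model: always predicts a single author."""
    return 1


def run(input_dir, output_dir):
    """Write one [filename].truth per [filename].txt found in input_dir."""
    input_dir, output_dir = Path(input_dir), Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for problem in sorted(input_dir.glob("*.txt")):
        # Assumption: problem files are UTF-8 encoded.
        text = problem.read_text(encoding="utf-8")
        solution = {"authors": predict_author_count(text)}
        target = output_dir / (problem.stem + ".truth")
        target.write_text(json.dumps(solution), encoding="utf-8")
        written.append(target)
    return written


def main(argv=None):
    """Parse -i/-o and process the evaluation directory."""
    parser = argparse.ArgumentParser(description="Style change detection")
    parser.add_argument("-i", dest="input_dir", required=True,
                        help="absolute path to the evaluation corpus")
    parser.add_argument("-o", dest="output_dir", required=True,
                        help="absolute path to the empty output directory")
    args = parser.parse_args(argv)
    run(args.input_dir, args.output_dir)
```

To use this as the actual entry point, call `main()` under the usual `if __name__ == "__main__":` guard and replace the placeholder with a real model.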

In general, this task follows PAN's software submission strategy described here.

Note: By submitting your software you retain full copyrights. You agree to grant us usage rights only for the purpose of the PAN competition. We agree not to share your software with a third party or use it for other purposes than the PAN competition.

Related Work

- Style Change Detection, PAN@CLEF'18

- Style Breach Detection, PAN@CLEF'17

- Author Clustering, PAN@CLEF'16

- J. Cardoso and R. Sousa. Measuring the performance of ordinal classification. International Journal of Pattern Recognition and Artificial Intelligence, Volume 25, Issue 8, pages 1173–1195, 2011.

- Benno Stein, Nedim Lipka and Peter Prettenhofer. Intrinsic Plagiarism Analysis. In Language Resources and Evaluation, Volume 45, Issue 1, pages 63–82, 2011.

- Efstathios Stamatatos. A Survey of Modern Authorship Attribution Methods. Journal of the American Society for Information Science and Technology, Volume 60, Issue 3, pages 538-556, March 2009.